You're reading the Keybase blog.


In end-to-end (E2E) encrypted chat apps, users take charge of their own keys. When users lose their keys, they need to reset their accounts and start from scratch.

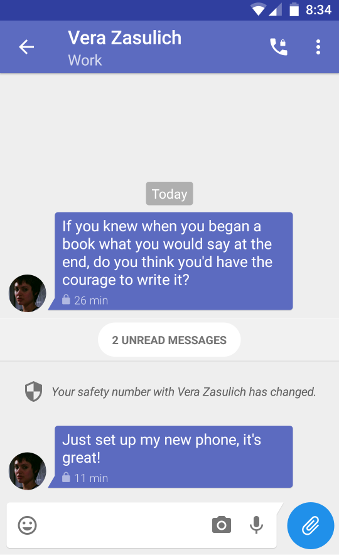

Account "resets" are dangerous. After a reset, you clear your public keys, and you become a cryptographic stranger in all your conversations. You must now reestablish identity, and in almost all cases, this means meeting in person and comparing "safety numbers" with every last one of your contacts. How often do you find yourself skipping this check, even though there can be no safety against a man-in-the-middle attack without it?

Even if you are serious about safety numbers, you might only see your chat partners once a year at a conference, so you're stuck.

But it's infrequent, right?

How often do resets happen? Answer: if you're using most encrypted chat apps, all the freaking time.

With those apps, you throw away the crypto and just start trusting the server: (1) whenever you switch to a new phone; (2) whenever any partner switches to a new phone; (3) when you factory-reset a phone; (4) when any partner factory-resets a phone; (5) whenever you uninstall and reinstall the app; or (6) when any partner uninstalls and reinstalls. If you have even a few dozen contacts, resets will affect you every few days.

Resets happen regularly enough that these apps make it look like no big deal:

Is this really TOFU?

In cryptography, the term TOFU ("Trust on first use") describes taking a gamble the first time two parties talk. Rather than meeting in person, you just trust a party in the middle to vouch for each side... and then, after the initial introduction, each side carefully tracks the keys to make sure nothing has changed. If a key does change, each side sounds the alarm.

Similarly, in SSH, if a remote host's key changes, it doesn't "just work," it gets downright belligerent:

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
IT IS POSSIBLE THAT SOMEONE IS DOING SOMETHING NASTY!
Someone could be eavesdropping on you right now (man-in-the-middle attack)!
It is also possible that a host key has just been changed.
The fingerprint for the RSA key sent by the remote host is
00:11:22:33:44:55:66:77:88:99:aa:bb:cc:dd:ee:ff
Please contact your system administrator.
Add correct host key in /Users/rmueller/.ssh/known_hosts to get rid of this message.
Offending RSA key in /Users/rmueller/.ssh/known_hosts:12
RSA host key for 8.8.8.8 has changed and you have requested strict checking.
Host key verification failed.

This is the right answer. And make no mistake: TOFU isn't TOFU if it lets you keep going with a cute little shield that flows by. You should be seeing a giant skull and crossbones.

Undoubtedly these chat services would argue that it's good enough because the user is warned, and a user could notice and check the safety numbers if they wanted. Here's why we disagree:

- Checking is infeasible, since it happens way too often.

- Checking sucks.

- Even a cursory poll of our security-conscious friends shows that no one bothers.

- Therefore it's just server-trust and SMS trust (ewww!) over and over and over again.

- Finally, these apps don't have to work this way, especially on device upgrades and additions. The common, happy case could be both smoother and safer; the rarer, unhappy case could be appropriately scarier. We'll get to Keybase's solution in a minute.

Let's stop calling this TOFU

There's a very effective attack here. Let's say Eve wants to break into Alice and Bob's existing conversation, and can get in the middle between them. Alice and Bob have been in contact for years, having long ago TOFU'ed.

Eve simply makes it look to Alice that Bob bought a new phone:

Bob (Eve): Hey Hey

Alice: Yo Bob! Looks like you got new safety numbers.

Bob (Eve): Yeah, I got the iPhone XS, nice phone, I'm really happy with it. Let's exchange safety numbers at RWC 2020. Hey - do you have Caroline's current address? Gonna surprise her while I'm in SF.

Alice: Bad call, Android 4 life! Yeah 555 Cozy Street.

So to call most E2E chat systems TOFU is far too generous. It's more like TADA: Trust After Device Additions. This is a real problem, not an artificial one, as it creates an opportunity for malicious introductions into pre-existing conversations. In real TOFU, by the time someone is interested in your TOFU conversation, they can't break in. With TADA, they can.

In group chats, the situation is even worse. The more people in the chat, the more common resets will be. In a company of just 20 people, by our estimate, a reset will happen every 2 weeks or so. And every person in the company will need to go meet the person who reset. In person. Otherwise, a single mole or hacker can compromise the entire chat.

A Solution

Is there a good solution, one that doesn't involve trusting servers with private keys? At Keybase, we think yes: true multi-device support. This means that you control a chain of devices, which are you. When you get a new device (a phone, a laptop, a desktop, an iPad, etc.), it generates its own key pair, and your previous device signs it in. If you lose a device, you "remove" it from one of your remaining devices. Technically this removal is a revocation, and there's also some key rotation that happens automatically in this case.
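To make the device chain concrete, here is a minimal Go sketch, assuming Ed25519 device keys and a far simpler link format than Keybase's real sigchain. `Device`, `Link`, `payload`, and `VerifyChain` are hypothetical names for illustration. Each device generates its own key pair, an already-trusted device signs a new one in, a removal is a signed revocation, and a verifier replays the chain from the first device.

```go
package main

import (
	"crypto/ed25519"
	"crypto/rand"
	"errors"
	"fmt"
)

// Device is a hypothetical model of one install. Its private key is
// generated locally and never leaves the device.
type Device struct {
	Name string
	Pub  ed25519.PublicKey
	priv ed25519.PrivateKey
}

// Link is a hypothetical chain entry: an existing device either adds
// (provisions) or revokes the Target key, and signs that statement.
type Link struct {
	Revoke bool
	Signer ed25519.PublicKey
	Target ed25519.PublicKey
	Sig    []byte
}

// NewDevice generates a fresh key pair on the "device".
func NewDevice(name string) *Device {
	pub, priv, err := ed25519.GenerateKey(rand.Reader)
	if err != nil {
		panic(err) // crypto/rand failing is not recoverable in this sketch
	}
	return &Device{Name: name, Pub: pub, priv: priv}
}

// payload is the byte string that gets signed: an add/revoke tag plus the key.
func payload(revoke bool, target ed25519.PublicKey) []byte {
	tag := byte('A')
	if revoke {
		tag = 'R'
	}
	return append([]byte{tag}, target...)
}

// Sign has this device vouch for adding or revoking target.
func (d *Device) Sign(revoke bool, target ed25519.PublicKey) Link {
	return Link{Revoke: revoke, Signer: d.Pub, Target: target,
		Sig: ed25519.Sign(d.priv, payload(revoke, target))}
}

// VerifyChain replays the links from the eldest device key and returns the
// set of currently trusted keys. Each link must be signed by a device that
// is still trusted at that point in the chain.
func VerifyChain(eldest ed25519.PublicKey, links []Link) (map[string]bool, error) {
	trusted := map[string]bool{string(eldest): true}
	for i, l := range links {
		if !trusted[string(l.Signer)] {
			return nil, fmt.Errorf("link %d: signer is not a trusted device", i)
		}
		if !ed25519.Verify(l.Signer, payload(l.Revoke, l.Target), l.Sig) {
			return nil, errors.New("bad signature")
		}
		if l.Revoke {
			delete(trusted, string(l.Target))
		} else {
			trusted[string(l.Target)] = true
		}
	}
	return trusted, nil
}

func main() {
	phone, laptop := NewDevice("phone"), NewDevice("laptop")
	links := []Link{
		phone.Sign(false, laptop.Pub), // the phone signs in the new laptop
		laptop.Sign(true, phone.Pub),  // later, the laptop revokes the lost phone
	}
	trusted, err := VerifyChain(phone.Pub, links)
	fmt.Println(err == nil, trusted[string(laptop.Pub)], trusted[string(phone.Pub)])
	// prints: true true false
}
```

Because a revoked key drops out of the trusted set, anything it signs afterwards is rejected, and a partner never has to fall back on trusting the server when one device changes.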

The net result is that you don't need to trust the server or meet in person when a partner or teammate gets a new device. Similarly, you don't need to trust the server or meet in person when they remove a device, unless it was their last. The only time you need to see a warning is when someone truly loses access to all their installs. And in that case, you're met with a serious warning, the way it should be:

The result is far fewer account resets. Historically on Keybase, the total number of device additions and revocations is ten times the number of account resets (you don't have to take our word on this, it's public in our Merkle Tree). Unlike other apps, we can show a truly horrifying warning when you converse with someone who has recently reset.

Device-to-device provisioning is a tricky engineering operation that we have iterated on a few times. An existing device signs the new device's public keys, and encrypts any important secret data for the new device's public key. This operation has to happen quickly (within a second), since that's about the user's attention span for this sort of hoop. As a result, Keybase uses a key hierarchy so that by transferring 32 bytes of secret data from the old device to the new, the new device can see all long-lived cryptographic data (see the FAQ below for more details). This might seem slightly surprising, but this is exactly the point of cryptography. Cryptography doesn't solve your secret management problems, it just makes them way more scalable.

The Full Security Picture

We can now motivate the four bedrock security properties we have baked into Keybase's app:

- long-lived secret keys never leave the devices they were created on

- full multi-device support keeps account resets to a minimum

- key revocations cannot be maliciously withheld or rolled-back

- forward secrecy supported via time-based exploding messages

The first two should look familiar. The third becomes important in a design where device revocations are expected and normal. The system must have checks to make sure that malicious servers cannot withhold revocations, which we've written about before.

See our blog post on exploding (ephemeral) messaging for more details about the fourth security property.

Lots of New Crypto; Did Keybase Get It Right?

These core Keybase security properties weren't available in an off-the-shelf implementation, an RFC, or even an academic paper. We had to invent some of the crypto protocols in-house, though fortunately, off-the-shelf, standardized and heavily-used cryptographic algorithms sufficed in all cases. All of our client code is open source and in theory anyone can find design or implementation bugs in our clients. But we wanted to shine a spotlight on the internals, and we hired a top security audit firm to take a deep dive.

Today we announce NCC Group's report, and that we are extremely encouraged by their results. Keybase invested over $100,000 in the audit, and NCC Group staffed top-tier security and cryptography experts on the case. They found two important bugs, both in our implementation, and we quickly fixed both. These bugs were only exploitable if our servers were acting maliciously. We can assure you they weren't, but you have no reason to believe us. That's the whole point!

We think the NCC team did an excellent job. Kudos to them for taking the time to deeply understand our design and implementation. The bugs they found were subtle, and our internal team failed to find them, despite many recent visits to those parts of the codebase. We encourage you to read the report here, or to read on further in our FAQ below.

Cheers!

💖 Keybase

FAQ

How DARE you attack Project XYZ?

We are no longer saying anything negative about any cryptography projects here.

What else?

We're proud of the fact that Keybase doesn't require phone numbers, and for people who care, the app can also cryptographically verify Twitter, Hacker News, Reddit, and GitHub proofs, in case that's how you know someone.

And... coming very soon... support for Mastodon.

What about phone porting attacks?

Many apps are susceptible to "phone-porting attacks." Eve visits a kiosk in a shopping mall and convinces Bob's mobile phone provider to point Bob's phone number to her device. Or she convinces a rep over the phone. Now Eve can authenticate to the chat servers, claiming to be Bob. The result looks like our Alice, Bob and Eve example above, but Eve doesn't need to infiltrate any servers. Some apps offer "registration locks" to protect against this attack, but because they're annoying, they're off by default.

I heard Keybase sends some encrypted private keys to the server?

In our early days (2014 and early 2015), Keybase was a web-based PGP app, and since there were few better options, we had a feature for users to store their PGP private keys on our servers, encrypted with their passphrases (which Keybase didn't know).

Starting in September 2015, we introduced Keybase's new key model. PGP keys are never (and never have been) used for Keybase chat or the Keybase filesystem.

How are old chats instantly available on new phones?

In some other apps, new devices do not see old messages, as syncing old messages through a server would contravene forward secrecy. The Keybase app allows users to designate some messages, or entire conversations, as "ephemeral." These messages explode after a set time and are doubly encrypted: once with long-lived chat encryption keys, and again with eagerly rotated and discarded ephemeral keys. Thus, ephemeral messages get forward secrecy and cannot be synced to new phones on provisioning.
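A minimal sketch of the double encryption, assuming AES-256-GCM from Go's standard library as a stand-in for whatever cipher the app actually uses; the layer order and the helper names (`SealEphemeral`, `OpenEphemeral`, `newKey`) are hypothetical. It shows why deleting the ephemeral key makes the message unrecoverable even to someone holding the long-lived key, and therefore why such messages cannot be synced to a newly provisioned device.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"errors"
	"fmt"
)

// newKey returns a random 256-bit key.
func newKey() []byte {
	k := make([]byte, 32)
	if _, err := rand.Read(k); err != nil {
		panic(err)
	}
	return k
}

func gcm(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// seal returns nonce || ciphertext under key.
func seal(key, plaintext []byte) ([]byte, error) {
	aead, err := gcm(key)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, aead.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return nil, err
	}
	return aead.Seal(nonce, nonce, plaintext, nil), nil
}

// open reverses seal.
func open(key, box []byte) ([]byte, error) {
	aead, err := gcm(key)
	if err != nil {
		return nil, err
	}
	n := aead.NonceSize()
	if len(box) < n {
		return nil, errors.New("ciphertext too short")
	}
	return aead.Open(nil, box[:n], box[n:], nil)
}

// SealEphemeral encrypts with the short-lived key, then wraps the result
// with the long-lived key.
func SealEphemeral(longLived, ephemeral, msg []byte) ([]byte, error) {
	inner, err := seal(ephemeral, msg)
	if err != nil {
		return nil, err
	}
	return seal(longLived, inner)
}

// OpenEphemeral needs both keys. Once ephemeral has been discarded (nil),
// the long-lived key alone only recovers the inner ciphertext.
func OpenEphemeral(longLived, ephemeral, box []byte) ([]byte, error) {
	inner, err := open(longLived, box)
	if err != nil {
		return nil, err
	}
	if ephemeral == nil {
		return nil, errors.New("ephemeral key discarded: message is unrecoverable")
	}
	return open(ephemeral, inner)
}

func main() {
	longLived, ephemeral := newKey(), newKey()
	box, err := SealEphemeral(longLived, ephemeral, []byte("meet at noon"))
	if err != nil {
		panic(err)
	}
	msg, err := OpenEphemeral(longLived, ephemeral, box)
	fmt.Println(string(msg), err)

	ephemeral = nil // the key is rotated away and deleted
	_, err = OpenEphemeral(longLived, ephemeral, box)
	fmt.Println(err)
}
```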

Non-ephemeral messages persist until the user explicitly deletes them and are E2E synced to new devices. So when you add someone to a team, or add a new device for yourself, the messages are unlocked.

See the next question for details about how that syncing actually happens.

Tell me about PUKs!

It's pronounced "puks" not "pukes."

Two years ago, we introduced per-user keys (PUKs). A PUK's public half is advertised in the user's public sigchain. The private half is encrypted for each device's public key. When Alice provisions a new device, her old device knows the secret half of her PUK and the new device's public key. She encrypts the secret half of the PUK for the new device's public key, and the new device pulls this ciphertext down via the server. The new device decrypts the PUK and can immediately access all long-lived chat messages.

Whenever Alice revokes a device, the revoking device rotates her PUK, so that all her devices except the most-recently revoked device get the new PUK.
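Rotation on revocation can be modeled as bookkeeping: each revocation bumps a PUK generation and hands the fresh secret only to the remaining devices. This toy model (`PUKState` and its methods are hypothetical) omits the per-device encryption and the signatures, and only tracks which generation each device holds.

```go
package main

import (
	"crypto/rand"
	"fmt"
)

// PUKState is a hypothetical, heavily simplified model of one user's
// per-user key. Encrypting each generation to each device's public key is
// elided; Access records which generation each device was given.
type PUKState struct {
	Generation int
	Secret     [32]byte
	Active     map[string]bool // device IDs that are currently provisioned
	Access     map[string]int  // device ID -> newest PUK generation it holds
}

func NewPUKState(firstDevice string) (*PUKState, error) {
	s := &PUKState{Active: map[string]bool{}, Access: map[string]int{}}
	if err := s.rotate(); err != nil {
		return nil, err
	}
	s.AddDevice(firstDevice)
	return s, nil
}

// rotate picks a fresh random secret and hands it only to active devices.
func (s *PUKState) rotate() error {
	if _, err := rand.Read(s.Secret[:]); err != nil {
		return err
	}
	s.Generation++
	for id := range s.Active {
		s.Access[id] = s.Generation // stands in for "encrypt new PUK for this device"
	}
	return nil
}

// AddDevice gives a newly provisioned device the current generation.
func (s *PUKState) AddDevice(id string) {
	s.Active[id] = true
	s.Access[id] = s.Generation
}

// Revoke removes a device and immediately rotates, so the revoked device
// keeps only the generations it already had.
func (s *PUKState) Revoke(id string) error {
	delete(s.Active, id)
	return s.rotate()
}

func main() {
	s, err := NewPUKState("phone")
	if err != nil {
		panic(err)
	}
	s.AddDevice("laptop")
	if err := s.Revoke("phone"); err != nil {
		panic(err)
	}
	fmt.Println(s.Generation, s.Access["laptop"], s.Access["phone"])
	// prints: 2 2 1
}
```

In this model the revoked phone is stuck at generation 1: it cannot read anything encrypted under the new PUK.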

There is a crucial distinction between this syncing scheme and the early Keybase PGP system. Here, all keys involved have 32 bytes of true entropy, so they can't be brute-forced in the case of server compromise. True, if Curve25519 is broken, or if the PRNG that Go exposes is broken, then this system is broken. But PUK syncing doesn't make any other meaningful cryptographic assumptions.
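A quick back-of-the-envelope for the brute-force claim. Here H is an illustrative symbol for the entropy, in bits, of a passphrase protecting a key, as in the early PGP feature:

$$
32\ \text{bytes} \times 8\ \tfrac{\text{bits}}{\text{byte}} = 256\ \text{bits},
\qquad
\mathbb{E}[\text{guesses}] = \frac{2^{256}+1}{2} \approx 2^{255}
$$

$$
\mathbb{E}[\text{guesses}]_{\text{passphrase}} \approx 2^{H-1}
$$

A passphrase-encrypted key is only as strong as H, however strong the cipher; a uniformly random 32-byte key has no such weak link.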

What about big group chats?

tl;dr teams have their own auditable signature chains, assigning role changes and member additions and removals.

Security researchers have written about "ghost user" attacks on group chats. If users' clients can't cryptographically verify group membership, then malicious servers can inject spies and moles into group chats. Keybase has a very robust story here in the form of our teams feature, which we will revisit in a future blog post.

Can you talk more about NCC-KB2018-001?

We believe this bug to be the most significant find of the NCC audit. Keybase makes heavy use of append-only immutable data structures to defend against server equivocation. In the case of a bug, an honest server might want to equivocate. It might need to say: "I previously told you A, but there was a bug, I meant B." But our clients have a blanket policy to not allow the server that flexibility. So instead, when we do hit bugs, we typically need to hardcode exceptions into the clients.

We also recently introduced Sigchain V2, which solves scalability problems we didn't accurately foresee in V1. With V2 sigchains, the clients are more sparing in which cryptographic data they pull down from the server, and only bother to fetch one signature over the tail of the signature chain, rather than a signature for every intermediate link. Thus, clients lost the ability to always look up the hash of the signature of a chainlink, but we previously used those hashes to look up bad chainlinks in that list of hardcoded exceptions. The PR that introduced Sigchain V2 forged ahead, forgetting this detail about hardcoded exceptions since it was buried under multiple layers of abstraction, and simply trusted a field in the server's reply for what that hash was.

Once NCC uncovered this bug, the fix was easy enough: look up hardcoded exceptions by the hash of the chainlink, rather than the hash of the signature over the chainlink. A client can always compute these hashes directly.
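A minimal sketch of the fixed lookup, assuming SHA-256 over the raw chainlink bytes as the link identifier; `knownBadLinks`, `linkHash`, and `IsHardcodedException` are hypothetical names. The property that matters is that the client hashes bytes it already holds instead of trusting a hash field supplied by the server.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// knownBadLinks is a hypothetical hardcoded exception list, keyed by the
// hex SHA-256 of the raw chainlink bytes. A real client would ship the
// entries as literal constants.
var knownBadLinks = map[string]bool{}

// linkHash is computed by the client from bytes it already has in hand.
func linkHash(link []byte) string {
	sum := sha256.Sum256(link)
	return hex.EncodeToString(sum[:])
}

// IsHardcodedException never consults a hash field from the server's reply;
// trusting such a field is the pattern behind NCC-KB2018-001.
func IsHardcodedException(link []byte) bool {
	return knownBadLinks[linkHash(link)]
}

func main() {
	buggy := []byte(`{"seqno":42,"body":"written by a buggy client"}`)
	knownBadLinks[linkHash(buggy)] = true

	fmt.Println(IsHardcodedException(buggy))                  // true
	fmt.Println(IsHardcodedException([]byte(`{"seqno":43}`))) // false
}
```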

We can also attribute this bug to added complexity required to support both sigchain V1 and sigchain V2 simultaneously. Modern clients write sigchain V2 links, but all clients need to support legacy V1 links indefinitely. Recall that clients sign links with their private per-device keys. We cannot coordinate all clients rewriting historical data in any reasonable time frame, since those clients might just be offline.

Can you talk more about NCC-KB2018-004?

As in 001 (see just above), we were burned by some combination of support for legacy decisions and optimizations that seemed important as we got more operational experience with the system.

In Sigchain V2, we shave bytes off of chainlinks to decrease the bandwidth required to look up users. These savings are especially important on mobile. Thus, we encode chainlinks with MessagePack rather than JSON, which gives a nice savings. In turn, clients sign and verify signatures over these chainlinks. The researchers at NCC found clever ways to cook up signature bodies that looked like JSON and MessagePack at the same time, and could thus say conflicting things. We unwittingly introduced this decoding ambiguity while optimizing, when we switched from Go's standard JSON parser to one that we found performed much better. This faster parser was happy to skip over a lot of leading garbage before it found actual JSON, which enabled this "polyglot attack." We fixed this bug with some extra input validation.
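To illustrate this class of fix, here is a Go sketch contrasting a lenient scanner that skips leading bytes with a strict check requiring the entire payload to be one JSON object. The function names are hypothetical, and this is not Keybase's actual validation code.

```go
package main

import (
	"bytes"
	"encoding/json"
	"errors"
	"fmt"
)

// lenientFind mimics the risky behavior: skip any leading bytes until
// something that looks like the start of a JSON object.
func lenientFind(payload []byte) []byte {
	if i := bytes.IndexByte(payload, '{'); i >= 0 {
		return payload[i:]
	}
	return nil
}

// ParseLinkStrict accepts the payload only if the whole byte string, from
// the very first byte to the last, is a single JSON object.
func ParseLinkStrict(payload []byte) (map[string]any, error) {
	if len(payload) == 0 || payload[0] != '{' {
		return nil, errors.New("payload must begin with '{'")
	}
	if !json.Valid(payload) {
		return nil, errors.New("payload is not exactly one JSON value")
	}
	var link map[string]any
	if err := json.Unmarshal(payload, &link); err != nil {
		return nil, err
	}
	return link, nil
}

func main() {
	body := []byte(`{"type":"sibkey"}`)
	// 0x92 0x01 0xc0 is a complete MessagePack value (the array [1, nil]),
	// so a binary decoder and a lenient JSON scanner see different things.
	polyglot := append([]byte{0x92, 0x01, 0xc0}, body...)

	fmt.Printf("lenient sees: %s\n", lenientFind(polyglot))
	_, err := ParseLinkStrict(polyglot)
	fmt.Println("strict:", err)
}
```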

With Sigchain V2, we also adopted Adam Langley's suggestion that signers prefix their signature payloads with a context string and a \0 byte, so that verifiers don't get confused about a signer's intent. There were bugs on the verifying side of this context-prefix idea, which could have led to other "polyglot" attacks. We quickly fixed this flaw with a whitelist.

For both bugs under 004, the server rejected the "polyglot" payloads that NCC constructed, so attacking these weaknesses would only have been possible with an assist from a compromised server.

Where are your docs?

We're investing more time into our docs in the coming months.

Can you talk more about this claim by NCC? "However, the attacker is capable of refusing to update a sigchain or rolling back a user's sigchain to a previous state by truncating later chain links."

Keybase makes heavy use of immutable, append-only public data structures, which force its server infrastructure to commit to one true view of users' identities. In this way, we can guarantee device revocations and team member removals in such a way that a compromised server cannot roll back. If the server decides to show an inconsistent view, this deviation becomes part of an immutable public record. Keybase clients or a third party auditor can discover the inconsistency at any time after the attack. We think these guarantees far exceed those of competing products, and are near-optimal given the practical constraints of mobile phones and clients with limited processing power.

To be clear, Keybase cannot invent signatures for someone; like any server, it could withhold data. But our transparent Merkle tree is designed to keep any such withholding to a very short period, and to make it permanently discoverable.

How does Keybase handle account resets?

When Keybase users actually lose all of their devices (as opposed to just adding new ones or revoking some subset), they need to reset. After an account reset, a user is basically new, but has the same username as before. The user cannot sign a "reset statement" because she has lost all of her keys (and therefore needs a reset). So instead, the Keybase server commits an indelible statement into the Merkle Tree that signifies a reset. Clients enforce that these statements cannot be rolled back. A future blog post will detail the exact mechanisms involved.

That user then needs to redo any identity proofs (Twitter, GitHub, whatever) with their new keys.

Can the server just swap out someone's Merkle Tree Leaf to advertise a whole different set of keys?

The NCC authors consider an evil Keybase server that performs a wholesale swap-out of a Merkle Tree leaf, replacing Bob's true set of keys with a totally new fake set. A server attempting this attack has two options. First, it can fork the state of the world, putting Bob on one side of the fork, and those it wants to fool on the other. And second, it can "equivocate", publishing a version of the Merkle Tree with Bob's correct set of keys, and other versions with the faked set. Users who interact with Bob regularly will detect this attack, since they will check that previously-loaded versions of Bob's history are valid prefixes of the newer versions they download from the server.
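The prefix check can be sketched in a few lines, assuming each chainlink is identified by a hash string; `CheckPrefix` is a hypothetical name.

```go
package main

import (
	"errors"
	"fmt"
)

// CheckPrefix reports whether the chain a client cached earlier is a prefix
// of the chain it just downloaded. Links are identified by (hypothetical)
// hash strings.
func CheckPrefix(cached, fresh []string) error {
	if len(fresh) < len(cached) {
		return errors.New("chain got shorter: possible rollback")
	}
	for i := range cached {
		if cached[i] != fresh[i] {
			return fmt.Errorf("link %d differs: history was rewritten", i)
		}
	}
	return nil
}

func main() {
	cached := []string{"link1", "link2"}
	fmt.Println(CheckPrefix(cached, []string{"link1", "link2", "link3"})) // <nil>
	fmt.Println(CheckPrefix(cached, []string{"link1"}))                   // truncated
	fmt.Println(CheckPrefix(cached, []string{"link1", "fake2", "fake3"})) // rewritten
}
```

Truncation and rewriting both fail the check; the only change a returning client accepts is new links appended to the end.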

Third-party validators who scan the entirety of every Keybase update could also catch this attack. If you have read all the way down here, and you'd be interested in writing a third-party Keybase validator, we'd love that, and we can offer a sizeable commission for one that works. Reach out to max on Keybase.

Otherwise, we hope to schedule the creation of a standalone validator soon.

Can you believe I made it to the end?

Did you though, or did you just scroll down here?
